AI with a human touch: regulation for co-creation

From the welcoming speech by Carlo van de Weijer, host and chair of the Brainport AI Hub, it was clear that AI is not coming to replace humans. Instead, as he put it, “Humans that can work with AI will replace humans who don’t.”

Highlights:

- This year’s edition of the AI Summit Brainport focused on human-centric AI.

- With regulation coming into force, AI can align with human values.

- AI-human co-creation can yield a new understanding of the world.

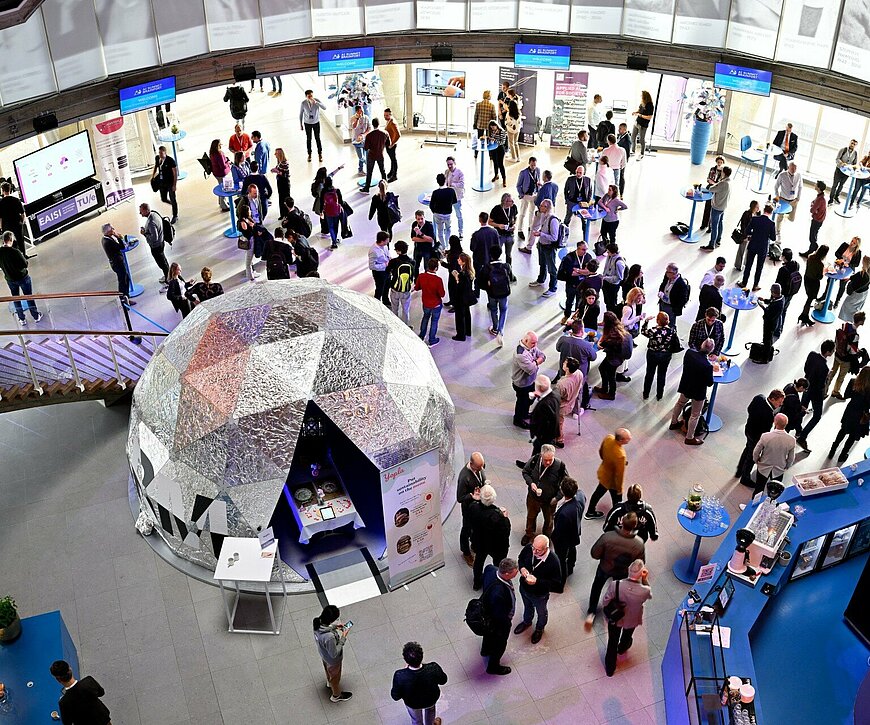

The 2023 AI Summit Brainport took place on November 2 at the Evoluon in Eindhoven, an iconic (but not AI-generated) venue. The summit was organized by the Eindhoven AI Systems Institute (EAISI) of Eindhoven University of Technology (TU/e), the province of Noord-Brabant, and the Brainport AI Hub. Human-centered AI was the event’s focus, a topic discussed in several presentations, workshops, and panels throughout the day.

AI applications are surging amid concerns over how the technology should be governed. That same week, the G7 countries agreed on an AI code of conduct ahead of the first global summit on AI, hosted by the UK. Meanwhile, US President Joe Biden issued an executive order aimed at harnessing the promise of AI while managing its risks. The European Union had earlier proposed its own AI Act, which introduces a risk-based approach to regulating the technology.

Language matters

In a thought-provoking keynote, Wijnand IJsselsteijn, professor of Cognition and Affect in Human-Technology Interaction, questioned the metaphors commonly used to describe AI. Machine learning, a technique that lets AI systems learn from experience, has nothing to do with human learning, for instance. “A machine doesn’t take any initiative to learn; it doesn’t contextualize, has no subjectivity nor curiosity,” he said.

He gave deep learning the same treatment. The term refers to a subset of machine learning that uses neural networks with many layers, loosely inspired by the human brain. “It implies some depth of thought, wisdom, a deep understanding of things, which, however, requires meaningful connections.” Overall, attributing human qualities to AI creates epistemic confusion, according to the professor. “Language shapes our understanding of AI,” he stressed. At the same time, he believes AI can help us understand the world better and grasp it in different ways.

IJsselsteijn also cited Howard Gardner’s theory of multiple intelligences. According to this theory, humans have eight different kinds of intelligence, such as intrapersonal, visual-spatial, or musical, and individuals often have one of them more developed than the others. While AI can, to some extent, replicate logical-mathematical intelligence, other kinds, such as visual-spatial intelligence, cannot (yet) be reproduced.

Enforcing control

Regulating AI sets boundaries and fosters the development of human-centered technology. Following an introduction to the AI Act by Susan Hommerson, research policy officer at TU/e, a panel discussed the regulation. What if we don’t regulate AI at all? “It might go wrong; we need to normalize AI just as we do with toys or medical devices,” said Koen Holtman, a systems architect and AI safety expert.

Wouter van der Loop, Global Privacy Officer at NXP Semiconductors, also underscored the need to place controls on the technology by defining responsibility and accountability, as well as the ways it may be used. Jelle Donders, founder of the Eindhoven AI Safety Team, believes the EU law offers a good approach to regulating individual and collective risks but falls short of addressing societal ones. “It’s not obvious that we will be in control,” he said.

Hybridization

The human brain has unmatched capabilities. AI can process information tirelessly, but it does so at the expense of thousands of megawatts of power in an effort to replicate what our brain does with no more than twenty watts. Is it possible to combine the two to create the perfect brain?

TU/e’s BayesBrain project was presented during a round of AI research presentations. BayesBrain is attempting to create the world’s first brain-on-chip AI computer. The goal is a hybrid computer that is as programmable as a chip and as energy-efficient as our neurons. TU/e scientists envision combining cutting-edge chips with cultured cells grown using stem cell technology.

Hybrid governance is the research focus of Professor Frauke Behrendt. Specifically, she studies the use of AI to enable sustainable mobility, particularly mobility as a service (MaaS), delving into the dynamics underlying decision-making processes. What if AI becomes part of the process? The professor believes hybrid governance could be achieved if policymakers are aware of the possibilities AI offers and leverage it, for instance, to implement sustainability goals.

Joining forces

If human values are to remain the cornerstone of our future, how should we steer development so that AI systems care about what we care about? In Diego Morales’s view, metacognition is the key. Metacognition is the practice of being aware of one’s own thinking and using that awareness to plan, monitor, and evaluate one’s learning or problem-solving. If this is not handled properly, the result might be an AI that selects and prioritizes the things we care about on our behalf.

The risks connected to AI are evident to anyone. While stopping its development is out of the question, regulation needs to step in to set boundaries and protect us from the dangerous directions AI could take. Co-creation might be the way forward.